-

Dec 20, 2020

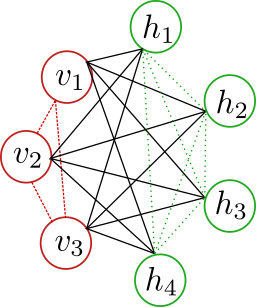

A Boltzmann machine is an unsupervised machine learning model that, much like a neural network, is represented as a graph of connected units. This architecture expresses a probability distribution over the data in terms of visible units (the observed variables) and hidden units (latent variables).
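To make "a probability distribution in terms of visible and hidden units" concrete, here is a minimal sketch assuming the restricted (bipartite) variant with binary units and the standard energy function E(v, h) = -a·v - b·h - vᵀWh. The joint probability is exp(-E)/Z, and p(v) is obtained by summing out the hidden units. The parameter values `W`, `a`, `b` are arbitrary illustrations, and brute-force enumeration is only feasible for tiny models.

```python
import itertools
import math

def energy(v, h, W, a, b):
    """RBM energy: E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j."""
    e = -sum(ai * vi for ai, vi in zip(a, v))
    e -= sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e

def visible_marginals(W, a, b):
    """Exact p(v) by enumerating every binary state (tiny models only)."""
    n_v, n_h = len(a), len(b)
    states_v = list(itertools.product((0, 1), repeat=n_v))
    states_h = list(itertools.product((0, 1), repeat=n_h))
    # Unnormalized marginal of v: sum over hidden states of exp(-E(v, h)).
    unnorm = {v: sum(math.exp(-energy(v, h, W, a, b)) for h in states_h)
              for v in states_v}
    Z = sum(unnorm.values())  # partition function
    return {v: p / Z for v, p in unnorm.items()}

# Hypothetical parameters: 3 visible units, 2 hidden units.
W = [[1.0, -0.5], [0.5, 2.0], [-1.0, 0.0]]
a = [0.1, -0.2, 0.0]
b = [0.0, 0.3]
p = visible_marginals(W, a, b)
for v, prob in sorted(p.items()):
    print(v, round(prob, 4))
```

The hidden units never appear in the output distribution directly; they only shape p(v) through the sum over h, which is what lets the model capture dependencies between visible units.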

-

Dec 1, 2020

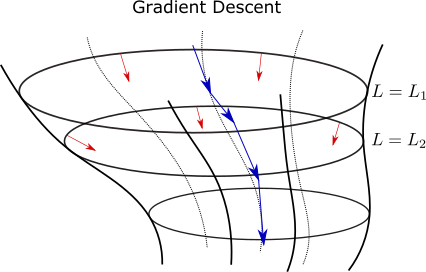

Stochastic gradient descent (SGD) is an algorithm for online optimization. Its purpose is to estimate the optimal parameters of a learning hypothesis. Unlike (batch) gradient descent, which uses the whole dataset for every update, SGD updates the parameters using a single example or a small mini-batch at a time, which makes it very useful for large datasets.
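A minimal sketch of the idea, assuming a one-dimensional linear hypothesis y ≈ w·x + c and squared-error loss: each update looks at one example only. The function name, learning rate, and synthetic data are illustrative choices, not taken from the post.

```python
import random

def sgd_linear_regression(data, lr=0.01, epochs=50, seed=0):
    """Fit y ~ w*x + c by SGD on squared error, one example per update."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    data = list(data)  # local copy so shuffling does not touch the caller's list
    for _ in range(epochs):
        rng.shuffle(data)  # visit examples in a random order each epoch
        for x, y in data:
            err = (w * x + c) - y  # prediction error on this single example
            # Gradient of 0.5 * err**2 is err * x w.r.t. w and err w.r.t. c.
            w -= lr * err * x
            c -= lr * err
    return w, c

# Hypothetical synthetic data: y = 3x + 0.5 plus small Gaussian noise.
rng = random.Random(42)
xs = [rng.uniform(-1, 1) for _ in range(200)]
data = [(x, 3.0 * x + 0.5 + rng.gauss(0, 0.05)) for x in xs]
w, c = sgd_linear_regression(data)
print(w, c)  # should land close to 3.0 and 0.5
```

Each parameter update costs O(1) here regardless of dataset size, which is the property that makes SGD attractive for large or streaming data.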

-

Nov 12, 2020

A neural network is a graphical representation of a set of linear and non-linear operations acting on an input data point. In a feed-forward neural network, we stack several layers sequentially. The input passes through each layer in turn, and its feature representation changes along the way. This process allows the creation of very complex predictors.
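The layer-by-layer transformation can be sketched as a forward pass, assuming dense (fully connected) layers, a ReLU non-linearity after every hidden layer, and a linear output layer. The weights below are hand-picked for illustration rather than learned.

```python
def relu(z):
    """Element-wise non-linearity max(0, z)."""
    return [max(0.0, zi) for zi in z]

def dense(x, W, b):
    """Linear (affine) operation W @ x + b, with W given as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def forward(x, layers):
    """Pass x through each (W, b) layer; apply ReLU after all but the last."""
    for k, (W, b) in enumerate(layers):
        x = dense(x, W, b)
        if k < len(layers) - 1:
            x = relu(x)
    return x

# Hypothetical 2 -> 3 -> 1 network.
layers = [
    ([[1.0, -1.0], [0.5, 2.0], [-1.0, 0.0]], [0.0, 0.0, 1.0]),  # 2 -> 3
    ([[1.0, 1.0, 1.0]], [0.0]),                                  # 3 -> 1
]
print(forward([2.0, 1.0], layers))  # → [4.0]
```

The hidden representation of `[2.0, 1.0]` is `[1.0, 3.0, 0.0]` after the first layer and ReLU; the output layer then combines those features linearly.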

-

Oct 31, 2020

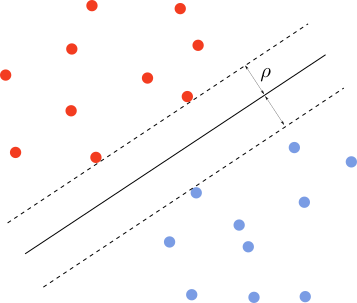

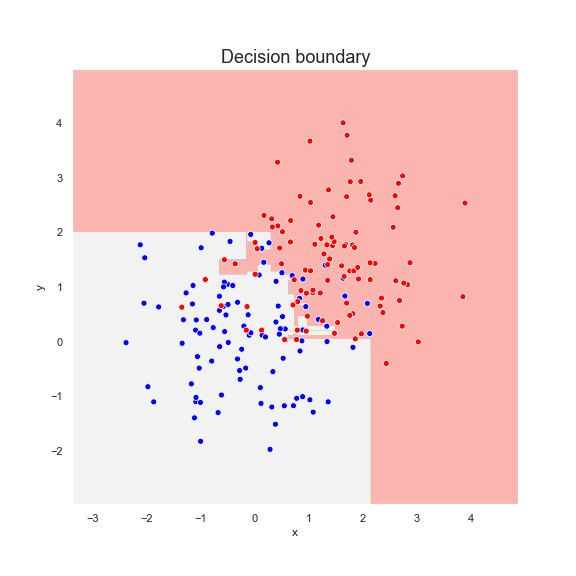

An SVM learns from the data by constructing the optimal separating line/curve (the maximum-margin boundary) between the classes. This contrasts with other algorithms, which try to do this by first estimating the Bayes predictor. SVMs have robust generalization properties. However, they are usually hard to train, especially on large datasets.
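The post does not say how the SVM is trained, so the following is only one possible approach: a sketch of a *linear* SVM trained with Pegasos-style stochastic subgradient descent on the regularized hinge loss. Kernel SVMs are usually trained differently (e.g. by solving the dual quadratic program). Appending a constant feature to learn a bias, and the values of `lam` and `steps`, are simplifying assumptions; the toy data is hypothetical.

```python
import random

def train_linear_svm(X, y, lam=0.01, steps=5000, seed=0):
    """Pegasos-style SGD on lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w.x_i)),
    with labels y_i in {-1, +1}. A constant feature 1.0 is appended so that
    w also learns a bias term (which is then regularized too)."""
    rng = random.Random(seed)
    Xa = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xa[0])
    for t in range(1, steps + 1):
        eta = 1.0 / (lam * t)  # decreasing step size
        i = rng.randrange(len(Xa))
        margin = y[i] * sum(wj * xj for wj, xj in zip(w, Xa[i]))
        w = [(1 - eta * lam) * wj for wj in w]  # gradient step on the regularizer
        if margin < 1:  # hinge loss active: move toward classifying x_i with margin
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xa[i])]
    return w

def predict(w, x):
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1

# Hypothetical toy data: two well-separated Gaussian clusters.
rng = random.Random(1)
X = ([(rng.gauss(2, 0.5), rng.gauss(2, 0.5)) for _ in range(40)]
     + [(rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)) for _ in range(40)])
y = [1] * 40 + [-1] * 40
w = train_linear_svm(X, y)
print(w)
```

Only points inside the margin (where `margin < 1`) contribute to the update, which mirrors how the final SVM boundary depends only on the support vectors.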

-

Oct 13, 2020

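Gradient boosting is another boosting algorithm. It uses gradient descent in function space to minimize the loss function, hence the name: each new weak learner is fit to the negative gradient of the loss with respect to the current predictions. However, unlike plain gradient descent with a fixed learning rate, the step size is recomputed at every step, typically with a line search.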
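A minimal sketch of the procedure, assuming squared loss L = ½(y − F)², one-dimensional inputs, least-squares decision stumps as weak learners, and an extra shrinkage factor. The step size γ is recomputed every round by an exact line search (closed form for squared loss). With least-squares stumps and squared loss that line search returns γ ≈ 1, so the effective step is then set mostly by shrinkage; for other losses the line search matters more. All names and data are illustrative.

```python
def fit_stump(xs, r):
    """Least-squares decision stump on 1-D inputs: choose the threshold that
    best splits the targets r and predict the mean of r on each side.
    Assumes xs contains at least two distinct values."""
    best = None
    for thr in sorted(set(xs))[:-1]:
        left = [ri for xi, ri in zip(xs, r) if xi <= thr]
        right = [ri for xi, ri in zip(xs, r) if xi > thr]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - lm) ** 2 for ri in left) + sum((ri - rm) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, thr, lm, rm)
    _, thr, lm, rm = best
    return lambda x: lm if x <= thr else rm

def gradient_boost(xs, ys, n_rounds=50, shrinkage=0.5):
    """Gradient boosting for squared loss L = 0.5 * (y - F)^2."""
    f0 = sum(ys) / len(ys)  # initial constant model
    F = [f0] * len(xs)
    stages = []
    for _ in range(n_rounds):
        r = [y - f for y, f in zip(ys, F)]  # negative gradient of L w.r.t. F
        h = fit_stump(xs, r)
        hx = [h(x) for x in xs]
        denom = sum(v * v for v in hx)
        # Line search: gamma minimizing sum of 0.5 * (r_i - gamma * h_i)^2.
        gamma = sum(ri * v for ri, v in zip(r, hx)) / denom if denom > 0 else 0.0
        step = shrinkage * gamma
        stages.append((step, h))
        F = [f + step * v for f, v in zip(F, hx)]
    return lambda x: f0 + sum(s * h(x) for s, h in stages)

# Hypothetical piecewise-constant target.
xs = list(range(10))
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 2.0, 2.0, 2.0, 2.0]
model = gradient_boost(xs, ys)
print([round(model(x), 3) for x in xs])
```

No single stump can fit this three-level target, but the sum of many small, line-searched steps converges toward it.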