-

Sep 27, 2020

Boosting is a technique in which a sequence of weak learners is fitted to the data one after another. At each step of AdaBoost, the sample weights are updated so that the next weak learner focuses on the points that were misclassified in earlier rounds.
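The reweighting loop can be sketched in plain Python. This is a minimal illustration, assuming binary labels in {-1, +1} and decision stumps (single-threshold splits) as the weak learners; the helper names `fit_stump`, `adaboost` and `adaboost_predict` are hypothetical. The toy data cannot be separated by any single threshold, but three boosted stumps classify it correctly.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best stump."""
    best = None
    for j in range(len(X[0])):
        for t in sorted(set(row[j] for row in X)):
            for polarity in (1, -1):
                # The stump predicts +polarity when x_j <= t and -polarity otherwise.
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (polarity if row[j] <= t else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best

def stump_predict(stump, row):
    _, j, t, polarity = stump
    return polarity if row[j] <= t else -polarity

def adaboost(X, y, n_rounds=10):
    n = len(X)
    w = [1.0 / n] * n          # start with uniform sample weights
    ensemble = []              # list of (alpha, stump) pairs
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        err = max(stump[0], 1e-10)   # guard against log(1/0) for a perfect stump
        if err >= 0.5:
            break                    # no better than chance: stop boosting
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Misclassified points (y * h = -1) get heavier, correct ones lighter.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, row):
    # Sign of the alpha-weighted vote of all stumps.
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

X = [[1], [2], [3], [4], [5], [6]]
y = [1, 1, -1, -1, 1, 1]   # not separable by a single threshold
model = adaboost(X, y, n_rounds=3)
print([adaboost_predict(model, row) for row in X])  # → [1, 1, -1, -1, 1, 1]
```

Each round's weight `alpha` grows as the stump's weighted error shrinks, so accurate stumps dominate the final vote.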

-

Sep 13, 2020

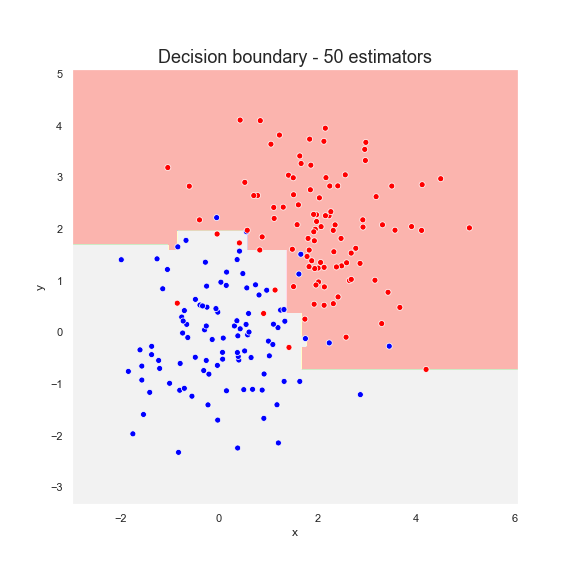

A random forest is an ensemble of decision trees. Each tree is fitted on a random bootstrap sample of the training set, and combining the predictions of many such trees reduces variance and helps prevent overfitting.
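The bootstrap-and-vote idea can be sketched in plain Python. To stay short, this sketch assumes depth-1 trees (stumps) as the base learners and draws the random feature subset once per tree; that coincides with per-split feature sampling only because a stump has a single split, and a real random forest grows much deeper trees. The function names and the `max_features` and `seed` parameters are illustrative, not a library API.

```python
import random
from collections import Counter

def fit_stump(X, y, features):
    """Best single split (fewest misclassifications) over the given feature indices."""
    best = None  # (errors, feature, threshold, left_label, right_label)
    for j in features:
        for t in sorted(set(row[j] for row in X)):
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = (sum(yi != left_label for yi in left)
                      + sum(yi != right_label for yi in right))
            if best is None or errors < best[0]:
                best = (errors, j, t, left_label, right_label)
    return best[1:]

def random_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: n rows drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        Xb, yb = [X[i] for i in idx], [y[i] for i in idx]
        # Random subset of candidate features for this (single-split) tree.
        features = rng.sample(range(d), max_features)
        forest.append(fit_stump(Xb, yb, features))
    return forest

def forest_predict(forest, row):
    # Majority vote over all trees.
    votes = Counter(left if row[j] <= t else right for j, t, left, right in forest)
    return votes.most_common(1)[0][0]

X = [[i, i] for i in range(10)]
y = [0] * 5 + [1] * 5
forest = random_forest(X, y)
print([forest_predict(forest, row) for row in X])
```

Because every tree sees a different bootstrap sample, the individual thresholds vary, and the majority vote smooths out that variation.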

-

Sep 1, 2020

The decision tree is one of the most popular and interpretable algorithms in machine learning. We explain how the algorithm works and what type of decision boundary it produces, and give a Python implementation.
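A compact sketch of greedy tree growing in plain Python, assuming numeric features, integer class labels, and Gini impurity as the split criterion (one common choice; entropy is another). Each internal node compares a single feature with a threshold, which is why the resulting decision boundary is made of axis-aligned segments. The names `best_split`, `build_tree` and `predict` are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """(feature, threshold) that most reduces weighted Gini impurity, or None."""
    best, best_score = None, gini(y)
    n = len(y)
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (j, t), score
    return best

def build_tree(X, y, depth=0, max_depth=3):
    """Internal nodes are (feature, threshold, left, right); leaves are labels."""
    split = best_split(X, y) if depth < max_depth else None
    if split is None:
        return Counter(y).most_common(1)[0][0]  # leaf: majority label
    j, t = split
    left = [(row, yi) for row, yi in zip(X, y) if row[j] <= t]
    right = [(row, yi) for row, yi in zip(X, y) if row[j] > t]
    return (j, t,
            build_tree([r for r, _ in left], [l for _, l in left], depth + 1, max_depth),
            build_tree([r for r, _ in right], [l for _, l in right], depth + 1, max_depth))

def predict(tree, row):
    # Walk down the tree until a leaf label is reached.
    while isinstance(tree, tuple):
        j, t, left, right = tree
        tree = left if row[j] <= t else right
    return tree

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]   # class 1 only in the upper-right corner
tree = build_tree(X, y)
print(tree)  # → (0, 0.5, 0, (1, 0.5, 0, 1))
```

The learned tree splits on x0 = 0.5 and then on x1 = 0.5, carving the plane into axis-aligned rectangles.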

-

Aug 20, 2020

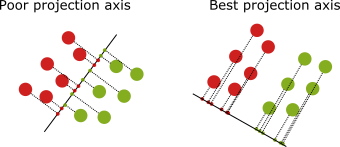

Linear discriminant analysis (LDA) is a classification algorithm that models each class with a Gaussian distribution, all classes sharing a common covariance matrix. LDA can also be used to reduce the dimensionality of the data. We explain the theory and the dimensionality reduction, and give a Python implementation from scratch.
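A sketch of two-class LDA in plain Python, assuming labels 0 and 1 and exactly two features so that the pooled covariance matrix can be inverted in closed form. With a shared covariance Sigma, the Bayes rule reduces to a linear test on the direction w = inv(Sigma) (mu1 - mu0), and projecting onto w is the one-dimensional reduction (LDA gives at most K - 1 dimensions for K classes). The divisor n - 2 for the pooled covariance is one common convention, and the function names are hypothetical.

```python
import math

def mean2(rows):
    n = len(rows)
    return [sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n]

def lda_fit(X, y):
    """Two-class LDA with a pooled covariance; returns (w, b)."""
    X0 = [x for x, c in zip(X, y) if c == 0]
    X1 = [x for x, c in zip(X, y) if c == 1]
    m0, m1 = mean2(X0), mean2(X1)
    # Pooled within-class covariance: summed scatter matrices / (n - 2).
    s = [[0.0, 0.0], [0.0, 0.0]]
    for rows, m in ((X0, m0), (X1, m1)):
        for x in rows:
            d = [x[0] - m[0], x[1] - m[1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    n = len(X)
    s = [[v / (n - 2) for v in row] for row in s]
    # Closed-form inverse of the 2x2 covariance matrix.
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    # Discriminant direction w = inv(Sigma) (mu1 - mu0).
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    mid = [(m0[0] + m1[0]) / 2, (m0[1] + m1[1]) / 2]
    # w.x + b > 0 means class 1; b includes the log prior ratio log(pi1 / pi0).
    b = -(w[0] * mid[0] + w[1] * mid[1]) + math.log(len(X1) / len(X0))
    return w, b

def lda_project(w, x):
    """1-D projection used for dimensionality reduction."""
    return w[0] * x[0] + w[1] * x[1]

def lda_predict(w, b, x):
    return 1 if lda_project(w, x) + b > 0 else 0

X = [[0, 0], [1, 0], [0, 1], [1, 1], [3, 3], [4, 3], [3, 4], [4, 4]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = lda_fit(X, y)
print([round(lda_project(w, x), 3) for x in X])  # projected 1-D coordinates
```

For this toy data the pooled covariance is isotropic, so w points along mu1 - mu0; with correlated features the inverse covariance rotates w away from that direction.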

-

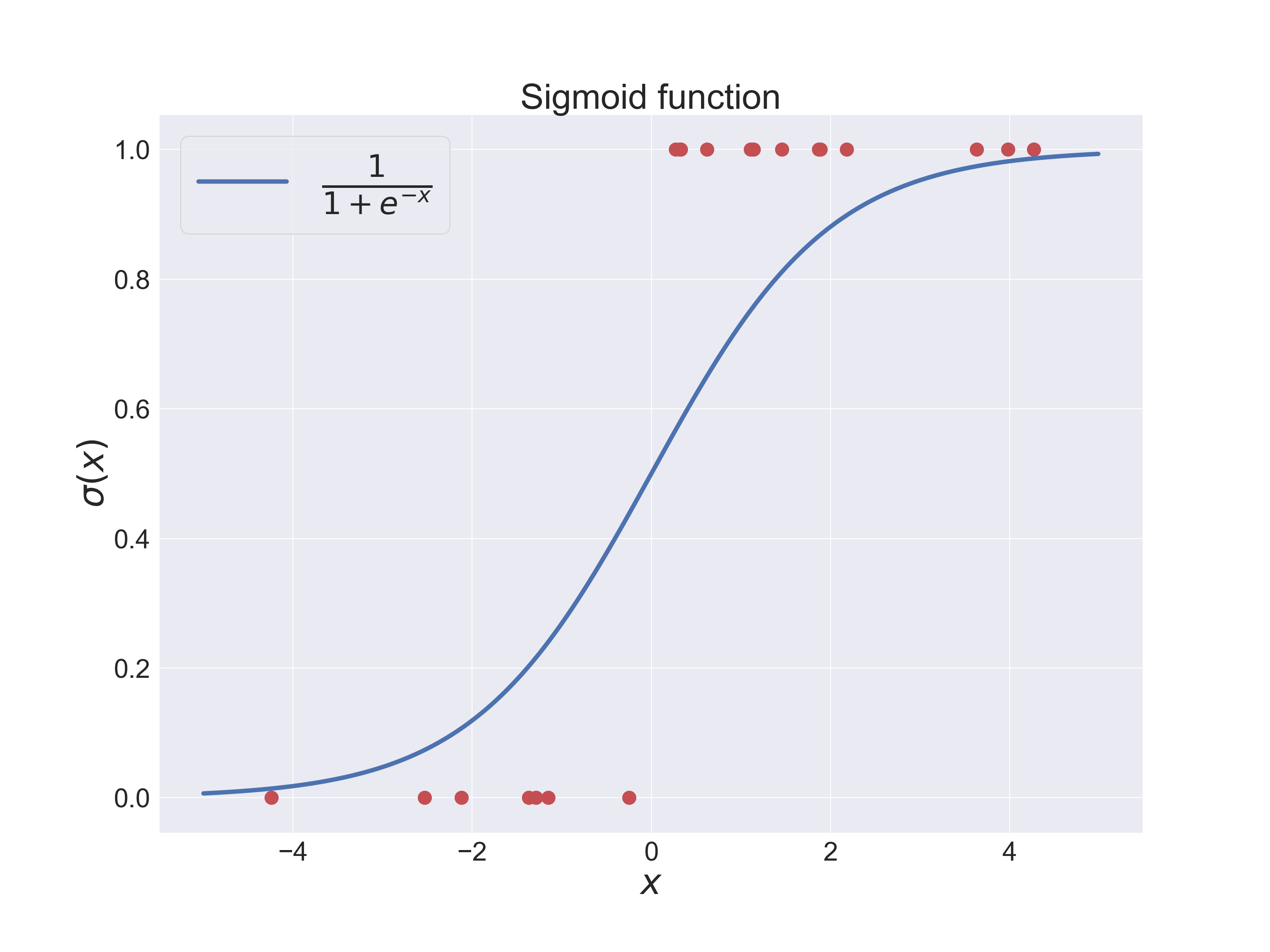

Aug 10, 2020

The logistic regression algorithm is a simple yet robust predictor. It can be seen as the simplest neural network: a single neuron with a sigmoid activation. We explain the theory, a learning algorithm based on the Newton-Raphson method, and a Python implementation.
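A minimal sketch of the Newton-Raphson update for logistic regression, assuming a single feature plus an intercept so that the 2x2 Hessian can be inverted by hand. Each step solves (X^T W X) delta = X^T (y - p) with W = diag(p (1 - p)), a scheme also known as iteratively reweighted least squares. The function name and the toy data are illustrative; the data is deliberately not linearly separable, because for separable data the maximum-likelihood coefficients diverge.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_newton(x, y, n_iter=20):
    """Fit p(y=1|x) = sigmoid(b0 + b1 * x) by Newton-Raphson on the log-likelihood."""
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        p = [sigmoid(b0 + b1 * xi) for xi in x]
        # Gradient of the log-likelihood: X^T (y - p).
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum((yi - pi) * xi for xi, yi, pi in zip(x, y, p))
        # Negative Hessian: X^T W X with W = diag(p (1 - p)).
        w = [pi * (1 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        # Newton step: beta += inv(X^T W X) X^T (y - p), via the 2x2 inverse.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (-h01 * g0 + h00 * g1) / det
    return b0, b1

x = [0, 1, 2, 3, 4, 5]
y = [0, 0, 1, 0, 1, 1]   # overlapping classes, so the MLE is finite
b0, b1 = fit_logistic_newton(x, y)
print(b0, b1)
```

Newton-Raphson typically converges in a handful of iterations here because the log-likelihood is concave; the fixed `n_iter` is a simplification in place of a convergence check.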