-

May 26, 2020

We address the importance of dimensionality in machine learning.

-

May 5, 2020

We derive Hoeffding's inequality, one of the most widely used results in machine learning theory.
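For readers who want to see the inequality in numbers, here is a minimal sketch, assuming the standard two-sided form for $n$ i.i.d. variables in $[a, b]$: $P(|\bar{X}_n - \mathbb{E}[\bar{X}_n]| \ge t) \le 2\exp(-2nt^2/(b-a)^2)$. The helper names (`hoeffding_bound`, `samples_needed`, `empirical_deviation_prob`) are hypothetical, and the Bernoulli simulation is only an illustrative check, not the derivation from the post.

```python
import math
import random


def hoeffding_bound(n: int, t: float, a: float = 0.0, b: float = 1.0) -> float:
    """Two-sided Hoeffding bound on P(|sample mean - true mean| >= t)
    for n i.i.d. variables taking values in [a, b]."""
    return 2.0 * math.exp(-2.0 * n * t**2 / (b - a) ** 2)


def samples_needed(t: float, delta: float, a: float = 0.0, b: float = 1.0) -> int:
    """Smallest n for which the bound above is at most delta."""
    return math.ceil((b - a) ** 2 * math.log(2.0 / delta) / (2.0 * t**2))


def empirical_deviation_prob(n: int, t: float, p: float = 0.5,
                             trials: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(|sample mean - p| >= t) for n Bernoulli(p) draws."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        mean = sum(rng.random() < p for _ in range(n)) / n
        # Small tolerance so that deviations of exactly t are not lost to rounding.
        if abs(mean - p) >= t - 1e-12:
            hits += 1
    return hits / trials


print(hoeffding_bound(100, 0.1))           # about 0.271
print(empirical_deviation_prob(100, 0.1))  # Monte Carlo estimate, well below the bound
print(samples_needed(0.1, 0.05))           # 185
```

Inverting the bound, as `samples_needed` does, is how the inequality is typically turned into a sample-size requirement: with $n \ge (b-a)^2 \ln(2/\delta) / (2t^2)$ samples, the empirical mean is within $t$ of the true mean with probability at least $1 - \delta$.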

-

May 2, 2020

The Rademacher complexity measures how well a hypothesis class can correlate with random noise. This gives a way to evaluate the capacity, or complexity, of a hypothesis class.
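To make "correlating with noise" concrete, the sketch below estimates the empirical Rademacher complexity $\hat{\mathfrak{R}}_S(H) = \mathbb{E}_\sigma\left[\max_{h} \frac{1}{m}\sum_{i=1}^m \sigma_i h(x_i)\right]$ by Monte Carlo, assuming $\{-1,+1\}$-valued hypotheses given by their label vectors on the sample. The threshold class, the helper names, and the comparison with Massart's lemma are illustrative choices, not taken from the post.

```python
import itertools
import math
import random


def empirical_rademacher(label_vectors, trials=2000, seed=0):
    """Monte Carlo estimate of E_sigma[max_h (1/m) sum_i sigma_i h(x_i)],
    where each element of label_vectors is (h(x_1), ..., h(x_m)) in {-1, +1}^m."""
    m = len(label_vectors[0])
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        sigma = [rng.choice((-1, 1)) for _ in range(m)]  # random "noise" labels
        best = max(sum(s * h for s, h in zip(sigma, hv)) for hv in label_vectors)
        total += best / m
    return total / trials


def threshold_labelings(xs):
    """Labelings of xs produced by h_theta(x) = +1 if x >= theta else -1."""
    s = sorted(set(xs))
    thetas = [s[0] - 1] + [(u + v) / 2 for u, v in zip(s, s[1:])] + [s[-1] + 1]
    return [[1 if x >= th else -1 for x in xs] for th in thetas]


def all_labelings(m):
    """Every vector in {-1, +1}^m: a class rich enough to fit any noise."""
    return [list(v) for v in itertools.product((-1, 1), repeat=m)]


def massart_bound(num_labelings, m):
    """Massart's lemma for {-1, +1}-valued classes: sqrt(2 ln |H_S| / m)."""
    return math.sqrt(2 * math.log(num_labelings) / m)


xs = list(range(20))
H = threshold_labelings(xs)
print(empirical_rademacher(H), massart_bound(len(H), len(xs)))
print(empirical_rademacher(all_labelings(4)))  # 1.0: every sign pattern is matched
```

The two extremes show the intended reading: a class that realizes every labeling matches any noise vector exactly and has complexity 1, while a single fixed hypothesis has expected correlation 0. Thresholds, which realize only $m+1$ labelings, land in between.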

-

May 1, 2020

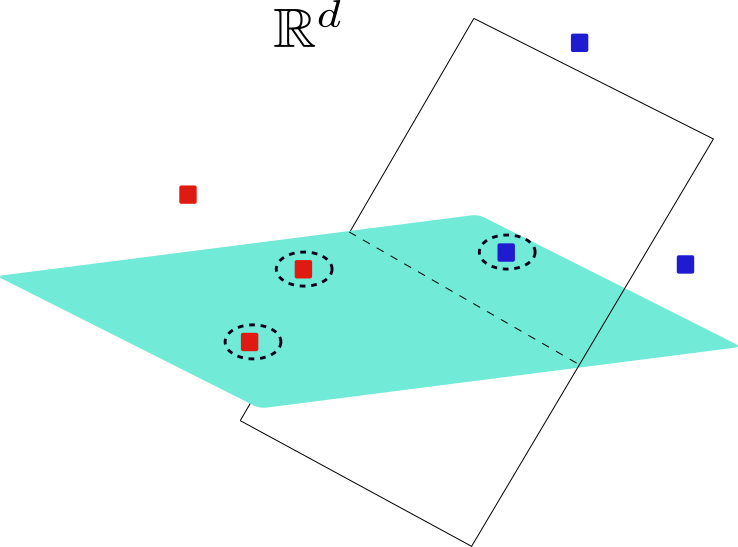

We study the binary classification problem in $\mathbb{R}^d$ using hyperplanes and show that the VC dimension of this hypothesis class is $d+1$.
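The lower half of this result can be checked mechanically. The sketch below, assuming classifiers of the form $h(x) = \mathrm{sign}(w \cdot x + b)$, takes the origin and the $d$ standard basis vectors as $d+1$ points and, for every labeling $y$, solves $w \cdot x_i + b = y_i$ directly ($b = y_0$, $w_i = y_i - y_0$). The point set and helper names are illustrative assumptions; the sketch only shows that some $d+1$ points are shattered, and does not check the matching upper bound that no $d+2$ points can be.

```python
import itertools


def witness_points(d):
    """The origin and the d standard basis vectors of R^d (d + 1 points)."""
    origin = [0.0] * d
    basis = [[1.0 if j == i else 0.0 for j in range(d)] for i in range(d)]
    return [origin] + basis


def fit_affine(labels):
    """Choose (w, b) with w . x_i + b = y_i on the witness points:
    b = y_0 from the origin, then w_i = y_i - y_0 from e_i."""
    b = labels[0]
    w = [y - b for y in labels[1:]]
    return w, b


def predict(w, b, x):
    """Affine classifier: +1 on the positive side of the hyperplane, -1 otherwise."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


def shatters(d):
    """True if the witness points are labeled correctly for all 2^(d+1) labelings."""
    points = witness_points(d)
    for labels in itertools.product((-1, 1), repeat=d + 1):
        w, b = fit_affine(labels)
        if [predict(w, b, x) for x in points] != list(labels):
            return False
    return True


print([shatters(d) for d in range(1, 6)])  # [True, True, True, True, True]
```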

-

Apr 30, 2020

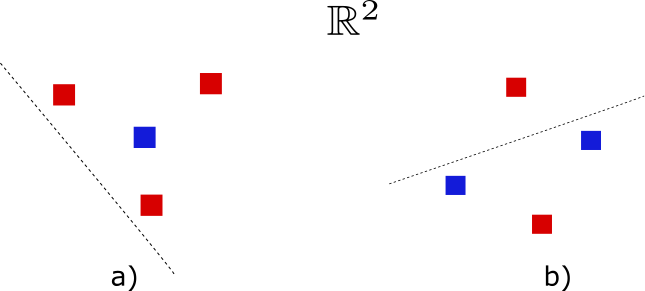

The VC dimension is a fundamental concept in machine learning theory. It measures the complexity of a hypothesis class through combinatorial properties, and it is used to show that certain infinite hypothesis classes are PAC-learnable. I explain the main ideas, namely the growth function and shattering, give examples, and show how the VC dimension can bound the generalization error.
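As a small worked example of the growth function and shattering, the sketch below uses intervals on the real line, $h_{[a,b]}(x) = 1$ if $a \le x \le b$ and $0$ otherwise. This class is chosen here for illustration and is not necessarily one of the post's examples. For $m$ distinct points the realizable labelings are the empty labeling plus every contiguous run of points, so $\Pi_H(m) = m(m+1)/2 + 1$, which gives VC dimension 2. The count matches the Sauer–Shelah bound $\sum_{i=0}^{d} \binom{m}{i}$ with $d = 2$ exactly. The function names are hypothetical.

```python
from math import comb


def interval_labelings(m):
    """All labelings of points 0..m-1 realizable by h(x) = 1 iff a <= x <= b.
    Any m distinct points on the line give the same count, so 0..m-1 suffices."""
    labelings = {tuple([0] * m)}  # an interval containing none of the points
    for i in range(m):
        for j in range(i, m):
            labelings.add(tuple(1 if i <= k <= j else 0 for k in range(m)))
    return labelings


def growth(m):
    """Growth function: number of distinct labelings of m points."""
    return len(interval_labelings(m))


def vc_dimension(max_m=10):
    """Largest m <= max_m for which all 2^m labelings are realized (shattering)."""
    d = 0
    for m in range(1, max_m + 1):
        if growth(m) == 2 ** m:
            d = m
        else:
            break
    return d


def sauer_bound(m, d):
    """Sauer-Shelah bound on the growth function of a class of VC dimension d."""
    return sum(comb(m, i) for i in range(d + 1))


print([growth(m) for m in range(1, 7)])          # [2, 4, 7, 11, 16, 22]
print([sauer_bound(m, 2) for m in range(1, 7)])  # [2, 4, 7, 11, 16, 22]
print(vc_dimension())                            # 2
```

The third point is where shattering fails: the labeling $(1, 0, 1)$ would need an interval that contains the outer points but skips the middle one.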